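Cognitive modeling is an important method for quantifying and understanding human mental processes, but it has so far been applied mostly to simple experimental tasks and data structures. When researchers attempt to build complex models to explain complex cognitive processes, whether the likelihood can be specified and parameter inference completed becomes a serious challenge. Neural simulation-based inference combines simulation-based inference with amortization: it requires no evaluation of the likelihood function, performs parameter inference directly from simulated data, and controls computational cost through neural network training, which allows fast and robust parameter inference. The method has been successfully applied within the evidence accumulation model framework to large-scale data, dynamic latent variables, and joint modeling, and it has begun to extend to reinforcement learning and Bayesian decision models. Future research can further validate neural simulation-based inference, use it to broaden the range of application of cognitive models, promote the development of theories and models, and deepen the understanding of complex human cognitive processing.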

Abstract

Cognitive computational modeling quantifies human mental processes using mathematical frameworks, thereby translating cognitive theories into testable hypotheses. Modern cognitive modeling involves four interconnected stages: defining models by formalizing symbolic theories into generative computational frameworks, collecting data through hypothesis-driven experiments, inferring parameters to quantify cognitive processes, and evaluating or comparing models. Parameter inference, a critical step that facilitates the integration of models and data, has traditionally relied on maximum likelihood estimation (MLE) and Bayesian methods like Markov Chain Monte Carlo (MCMC). These approaches depend on explicit likelihood functions, which become computationally intractable for complex models—such as those with nonlinear parameters (e.g., learning dynamics) or hierarchical/multimodal data structures.
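For reference, the dependence of both classical approaches on the likelihood can be written out explicitly. The notation is introduced here for illustration and is not taken from the article: $\theta$ denotes the model parameters, $x$ the observed data, $p(\theta)$ the prior, and $p(x \mid \theta)$ the likelihood.

```latex
\hat{\theta}_{\mathrm{MLE}} = \arg\max_{\theta} \, p(x \mid \theta),
\qquad
p(\theta \mid x) = \frac{p(x \mid \theta)\, p(\theta)}{\int p(x \mid \theta')\, p(\theta')\, \mathrm{d}\theta'}
```

Both expressions require evaluating $p(x \mid \theta)$. MCMC avoids the normalizing integral in the denominator, but it still needs the likelihood, up to a constant, at every sampling step.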

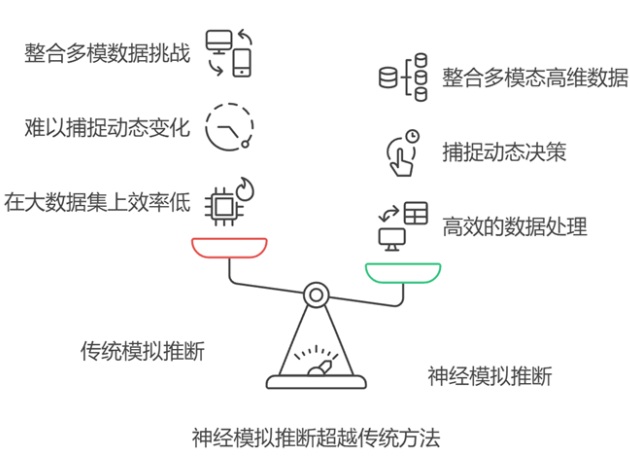

To address these challenges, simulation-based inference (SBI) emerged. SBI bypasses likelihood calculations by using simulations to learn the mapping between parameters and data. Early SBI methods, however, suffered from computational redundancy and limited scalability. Recent advances in neural simulation-based inference (NSBI), also called neural amortized inference (NAI), train neural networks on simulated parameter-data pairs in advance, enabling rapid posterior estimation.
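To make the early, non-amortized style of SBI concrete, the following minimal sketch implements rejection sampling (approximate Bayesian computation) for a toy Gaussian model. The model, the uniform prior, the tolerance, and all function names are assumptions chosen for illustration; they are not taken from the studies discussed here.

```python
import random
import statistics

def simulate(theta, n_obs, rng):
    """Toy generative model: n_obs draws from Normal(theta, 1)."""
    return [rng.gauss(theta, 1.0) for _ in range(n_obs)]

def rejection_abc(observed, n_sims, tolerance, rng):
    """Keep prior draws whose simulated summary lands close to the observed one."""
    obs_summary = statistics.fmean(observed)
    accepted = []
    for _ in range(n_sims):
        theta = rng.uniform(-5.0, 5.0)  # draw from an assumed Uniform(-5, 5) prior
        sim_summary = statistics.fmean(simulate(theta, len(observed), rng))
        if abs(sim_summary - obs_summary) < tolerance:
            accepted.append(theta)      # the likelihood is never evaluated
    return accepted

rng = random.Random(0)
observed = simulate(1.5, 50, rng)       # stands in for a real data set
posterior_draws = rejection_abc(observed, n_sims=20000, tolerance=0.1, rng=rng)
posterior_mean = statistics.fmean(posterior_draws)
```

In this scheme every new data set needs a fresh batch of simulations, and most of them are discarded. That is one plausible reading of the redundancy and scalability limits mentioned above, and it is the cost that amortized methods aim to pay only once.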

Despite its advantages, NSBI remains underutilized in psychology because of its technical complexity. This work focuses on neural posterior estimation, one of three NSBI approaches alongside neural likelihood estimation and neural model comparison. Neural posterior estimation operates in two phases: training and inference. In the training phase, parameters are sampled from prior distributions and synthetic data are generated from the model; a neural network is then trained on these parameter-data pairs to approximate the true posterior. In the inference phase, real data are passed to the trained network to generate posterior parameter samples. The BayesFlow framework enhances neural posterior estimation by integrating normalizing flows (flexible density estimators) with summary statistic networks, which enables handling of variable-length data and unsupervised posterior approximation. Its GPU-accelerated implementation further boosts efficiency.
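The two phases can be sketched with a deliberately simplified stand-in. In the code below a linear-Gaussian conditional density replaces the normalizing flow, and a hand-picked sample mean replaces the learned summary network, so this is not BayesFlow and does not use its API. The toy model, the prior, and every name are assumptions made for illustration.

```python
import random
import statistics

N_OBS = 10  # observations per simulated data set (illustrative)

def sample_prior(rng):
    return rng.gauss(0.0, 1.0)                     # theta ~ Normal(0, 1)

def simulate(theta, rng):
    return [rng.gauss(theta, 1.0) for _ in range(N_OBS)]

def summarize(data):
    return statistics.fmean(data)                  # hand-picked summary statistic

def train(n_sims, rng):
    """Training phase: fit q(theta | s) = Normal(a + b*s, var) to simulated pairs."""
    thetas = [sample_prior(rng) for _ in range(n_sims)]
    summaries = [summarize(simulate(t, rng)) for t in thetas]
    mean_s, mean_t = statistics.fmean(summaries), statistics.fmean(thetas)
    cov = sum((s - mean_s) * (t - mean_t) for s, t in zip(summaries, thetas))
    b = cov / sum((s - mean_s) ** 2 for s in summaries)
    a = mean_t - b * mean_s
    # Maximum-likelihood variance of the Gaussian: mean squared residual
    var = statistics.fmean([(t - (a + b * s)) ** 2 for s, t in zip(summaries, thetas)])
    return a, b, var

def infer(params, data, n_draws, rng):
    """Inference phase: posterior draws for new data, with no further simulation."""
    a, b, var = params
    mean = a + b * summarize(data)
    return [rng.gauss(mean, var ** 0.5) for _ in range(n_draws)]

rng = random.Random(1)
params = train(n_sims=5000, rng=rng)               # paid once, up front
draws = infer(params, data=simulate(0.8, rng), n_draws=1000, rng=rng)
```

For this conjugate toy model the exact posterior is Normal(n·s/(n+1), 1/(n+1)) with n = 10, so the fitted slope and variance should land near 10/11 and 1/11. Once trained, `infer` can be reused on any number of data sets, which is the sense in which the training cost is amortized.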

Neural posterior estimation has expanded the scope of evidence accumulation models (EAMs), one of the most widely used frameworks in cognitive modeling. First, it enables large-scale behavioral analyses, as demonstrated by von Krause et al. (2022), who applied neural posterior estimation to drift-diffusion models (DDMs) for 1.2 million implicit association test participants. By modeling condition-dependent drift rates and decision thresholds, they revealed nonlinear age-related changes in cognitive speed, which peaked at age 30 and declined after age 60. Neural posterior estimation completed inference in 24 hours, whereas MCMC required more than 50 hours for only a small subset, demonstrating its scalability.
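A drift-diffusion model of the kind mentioned above can be written as a short simulator, which is the only ingredient neural posterior estimation needs from the model. This is a minimal sketch assuming unit diffusion noise, an unbiased starting point, and Euler-Maruyama discretization. The function name, the parameter values, and the condition labels are hypothetical and do not reproduce the model of von Krause et al. (2022).

```python
import random

def simulate_ddm_trial(drift, threshold, non_decision_time, rng, dt=0.001, max_time=10.0):
    """Simulate one trial; return (rt_in_seconds, choice) with 1 = upper, 0 = lower."""
    evidence = threshold / 2.0          # unbiased starting point (assumption)
    elapsed = 0.0
    noise_scale = dt ** 0.5             # unit diffusion coefficient (assumption)
    while 0.0 < evidence < threshold and elapsed < max_time:
        evidence += drift * dt + noise_scale * rng.gauss(0.0, 1.0)
        elapsed += dt
    choice = 1 if evidence >= threshold else 0  # a timed-out trial (rare) also gets 0
    return non_decision_time + elapsed, choice

rng = random.Random(2)
conditions = {"congruent": 2.0, "incongruent": 1.0}  # hypothetical drift rates
results = {
    name: [
        simulate_ddm_trial(v, threshold=1.5, non_decision_time=0.3, rng=rng)
        for _ in range(500)
    ]
    for name, v in conditions.items()
}
# Proportion of upper-boundary (here: correct) responses per condition
accuracy = {name: sum(c for _, c in trials) / len(trials) for name, trials in results.items()}
```

Letting the drift rate differ by condition while the threshold and non-decision time are shared is one simple way to express condition-dependent parameters. A higher drift rate should yield a larger share of upper-boundary responses.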

Second, neural posterior estimation supports dynamic decision-making frameworks, exemplified by Schumacher et al. (2023), who combined high-level dynamics with low-level mechanisms using recurrent neural networks (RNNs). Their simultaneous estimation of hierarchical parameters achieved parameter recovery correlations above 0.9 and higher predictive accuracy than static models.

Finally, neural posterior estimation facilitates neurocognitive integration, as shown by Ghaderi-Kangavari et al. (2023), who linked single-trial EEG components (e.g., CPP slope) to behavior via shared latent variables like drift rate. This approach circumvented intractable likelihoods and revealed associations between CPP slope and non-decision time.

NSBI enhances cognitive modeling by enabling efficient analysis of complex, high-dimensional datasets. Its key limitations include model validity risks (biased estimates from incorrect generative assumptions), overfitting concerns (overconfident posteriors on novel data), and upfront training costs for amortized methods. Future work should refine validity checks—such as detecting model misspecification—and develop hybrid inference techniques. NSBI’s potential extends to computational psychiatry and educational psychology, promising deeper insights into cognition across domains. By addressing complexity barriers, NSBI could democratize advanced modeling for interdisciplinary research, advancing our understanding of human cognition through scalable, data-driven frameworks.

Keywords

cognitive modeling / generative models / Bayesian / simulation-based inference / neural simulation-based inference

Key words

cognitive modeling / generative models / Bayesian / simulation-based inference / neural network / neural amortized Bayesian inference


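References

[1] Liu, Y., & Hu, C.-P. (2024). Behavioral and cognitive neural evidence for evidence accumulation models. Chinese Science Bulletin, 69(8), 1068-1081.

[2] Guo M., Pan W., & Hu C.-P. (2024). Methods for model comparison in cognitive modeling. Advances in Psychological Science, 32(10), 1736.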

[3] Ahn W. Y., Haines N., & Zhang L. (2017). Revealing neurocomputational mechanisms of reinforcement learning and decision-making with the hBayesDM package. Computational Psychiatry, 1, 24-24.

[4] Beaumont, M. A. (2010). Approximate Bayesian computation in evolution and ecology. Annual Review of Ecology, Evolution, and Systematics, 41(1), 379-406.

[5] Bhattacharya A., Sarkar B., & Mukherjee S. K. (2007). Distance-based consensus method for ABC analysis. International Journal of Production Research, 45(15), 3405-3420.

[6] Boag R. J., Innes R., Stevenson N., Bahg G., Busemeyer J. R., Cox G. E., & Forstmann B. (2024). An expert guide to planning experimental tasks for evidence accumulation modelling. PsyArXiv.

[7] Boelts J., Lueckmann J. M., Gao R., & Macke J. H. (2022). Flexible and efficient simulation-based inference for models of decision-making. eLife, 11, e77220.

[8] Brosnan M. B., Sabaroedin K., Silk T., Genc S., Newman D. P., Loughnane G. M., & Bellgrove M. A. (2020). Evidence accumulation during perceptual decisions in humans varies as a function of dorsal frontoparietal organization. Nature Human Behaviour, 4(8), Article 8.

[9] Bürkner P. C., Scholz M., & Radev S. T. (2023). Some models are useful, but how do we know which ones? Towards a unified Bayesian model taxonomy. Statistics Surveys, 17, 216-310.

[10] Cannon P., Ward D., & Schmon S. M. (2022). Investigating the impact of model misspecification in neural simulation-based inference. ArXiv.

[11] Churchland, P. S., & Sejnowski, T. J. (1988). Perspectives on cognitive neuroscience. Science, 242(4879), 741-745.

[12] Cranmer K., Brehmer J., & Louppe G. (2020). The frontier of simulation-based inference. Proceedings of the National Academy of Sciences, 117(48), 30055-30062.

[13] Eaton N. R., Bringmann L. F., Elmer T., Fried E. I., Forbes M. K., Greene A. L., & Waszczuk M. A. (2023). A review of approaches and models in psychopathology conceptualization research. Nature Reviews Psychology, 2(10), Article 10.

[14] Elsemüller L., Schnuerch M., Bürkner P. C., & Radev S. T. (2023). A deep learning method for comparing Bayesian hierarchical models. ArXiv.

[15] Fengler A., Govindarajan L. N., Chen T., & Frank M. J. (2021). Likelihood approximation networks (LANs) for fast inference of simulation models in cognitive neuroscience. eLife, 10, e65074.

[16] Frazier D. T., Robert C. P., & Rousseau J. (2020). Model misspecification in approximate Bayesian computation: Consequences and diagnostics. Journal of the Royal Statistical Society Series B: Statistical Methodology, 82(2), 421-444.

[17] Geng H., Chen J., Chuan-Peng H., Jin J., Chan R. C. K., Li Y., Hu X., Zhang R. Y., & Zhang L. (2022). Promoting computational psychiatry in China. Nature Human Behaviour, 6(5), 615-617.

[18] Ghaderi-Kangavari A., Rad J. A., & Nunez M. D. (2023). A general integrative neurocognitive modeling framework to jointly describe EEG and decision-making on single trials. Computational Brain and Behavior, 6(3), 317-376.

[19] Glickman M., Moran R., & Usher M. (2022). Evidence integration and decision confidence are modulated by stimulus consistency. Nature Human Behaviour, 6(7), 988-999.

[20] Greenberg D., Nonnenmacher M., & Macke J. (2019). Automatic posterior transformation for likelihood-free inference. International Conference on Machine Learning, 2404-2414.

[21] Guest, O., & Martin, A. E. (2021). How computational modeling can force theory building in psychological science. Perspectives on Psychological Science, 16(4), 789-802.

[22] Habermann D., Schmitt M., Kühmichel L., Bulling A., Radev S. T., & Bürkner P. C. (2024). Amortized Bayesian multilevel models. ArXiv.

[23] Hato T., Schumacher L., Radev S. T., & Voss A. (2024). Lévy versus Wiener: Assessing the effects of model misspecification on diffusion model parameters. Research Square.

[24] Hedge C., Powell G., & Sumner P. (2018). The reliability paradox: Why robust cognitive tasks do not produce reliable individual differences. Behavior Research Methods, 50(3), 1166-1186.

[25] Hermans J., Delaunoy A., Rozet F., Wehenkel A., Begy V., & Louppe G. (2022). A trust crisis in simulation-based inference? Your posterior approximations can be unfaithful. ArXiv.

[26] Krajbich, I. (2019). Accounting for attention in sequential sampling models of decision making. Current Opinion in Psychology, 29, 6-11.

[27] Kriegeskorte, N., & Douglas, P. K. (2018). Cognitive computational neuroscience. Nature Neuroscience, 21(9), 1148-1160.

[28] Kucina T., Wells L., Lewis I., De Salas K., Kohl A., Palmer M. A., & Heathcote A. (2023). Calibration of cognitive tests to address the reliability paradox for decision-conflict tasks. Nature Communications, 14(1), 2234.

[29] McClelland, J. L. (2009). The place of modeling in cognitive science. Topics in Cognitive Science, 1(1), 11-38.

[30] Miletić S., Boag R. J., Trutti A. C., Stevenson N., Forstmann B. U., & Heathcote A. (2021). A new model of decision processing in instrumental learning tasks. eLife, 10, 1-55.

[31] Nunez M. D., Gosai A., Vandekerckhove J., & Srinivasan R. (2019). The latency of a visual evoked potential tracks the onset of decision making. NeuroImage, 197, 93-108.

[32] Nunez M. D., Vandekerckhove J., & Srinivasan R. (2017). How attention influences perceptual decision making: Single-trial EEG correlates of drift-diffusion model parameters. Journal of Mathematical Psychology, 76(1), 117-130.

[33] O’Connell, R. G., & Kelly, S. P. (2021). Neurophysiology of human perceptual decision-making. Annual Review of Neuroscience, 44(1), 495-516.

[34] Palestro J. J., Sederberg P. B., Osth A. F., Van Zandt T., & Turner B. M. (2018). Likelihood-free methods for cognitive science. Springer International Publishing.

[35] Papamakarios, G., & Murray, I. (2016). Fast ε-free inference of simulation models with Bayesian conditional density estimation. In D. Lee, M. Sugiyama, U. Luxburg, I. Guyon, & R. Garnett (Eds.), Advances in neural information processing systems (pp. 1036-1044). Curran Associates, Inc.

[36] Pedersen M. L., Frank M. J., & Biele G. (2017). The drift diffusion model as the choice rule in reinforcement learning. Psychonomic Bulletin and Review, 24(4), 1234-1251.

[37] Radev S. T., D'Alessandro M., Mertens U. K., Voss A., Köthe U., & Bürkner P. C. (2021). Amortized Bayesian model comparison with evidential deep learning. IEEE Transactions on Neural Networks and Learning Systems, 34(8), 4903-4917.

[38] Radev S. T., Mertens U. K., Voss A., Ardizzone L., & Köthe U. (2022). BayesFlow: Learning complex stochastic models with invertible neural networks. IEEE Transactions on Neural Networks and Learning Systems, 33(4), 1452-1466.

[39] Radev S. T., Schmitt M., Pratz V., Picchini U., Köthe U., & Bürkner P. C. (2023). JANA: Jointly amortized neural approximation of complex Bayesian models. Uncertainty in Artificial Intelligence, 1695-1706.

[40] Radev S. T., Schmitt M., Schumacher L., Elsemüller L., Pratz V., Schälte Y., Köthe U., & Bürkner P. C. (2023). BayesFlow: Amortized Bayesian workflows with neural networks. Journal of Open Source Software, 8(89), 5702.

[41] Ratcliff R., Schmiedek F., & McKoon G. (2008). A diffusion model explanation of the worst performance rule for reaction time and IQ. Intelligence, 36(1), 10-17.

[42] Rmus M., Pan T. F., Xia L., & Collins A. G. E. (2024). Artificial neural networks for model identification and parameter estimation in computational cognitive models. PLoS Computational Biology, 20(5), e1012119.

[43] Schad D. J., Betancourt M., & Vasishth S. (2021). Toward a principled Bayesian workflow in cognitive science. Psychological Methods, 26(1), 103-126.

[44] Schumacher L., Bürkner P. C., Voss A., Köthe U., & Radev S. T. (2023). Neural superstatistics for Bayesian estimation of dynamic cognitive models. Scientific Reports, 13(1), 13778.

[45] Schumacher L., Schnuerch M., Voss A., & Radev S. T. (2025). Validation and comparison of non-stationary cognitive models: A diffusion model application. Computational Brain and Behavior, 8(2), 191-210.

[46] Straub D., Niehues T. F., Peters J., & Rothkopf C. A. (2025). Inverse decision-making using neural amortized Bayesian actors. ArXiv.

[47] Tsetsos K., Gao J., McClelland J. L., & Usher M. (2012). Using time-varying evidence to test models of decision dynamics: Bounded diffusion vs. the leaky competing accumulator model. Frontiers in Neuroscience, 6, 79.

[48] van Geen, C., & Gerraty, R. T. (2021). Hierarchical Bayesian models of reinforcement learning: Introduction and comparison to alternative methods. Journal of Mathematical Psychology, 105, 102602.

[49] von Krause M., Radev S. T., & Voss A. (2022). Mental speed is high until age 60 as revealed by analysis of over a million participants. Nature Human Behaviour, 6(5), 700-708.

[50] Voskuilen C., Ratcliff R., & Smith P. L. (2016). Comparing fixed and collapsing boundary versions of the diffusion model. Journal of Mathematical Psychology, 73, 59-79.

[51] Wang Z., Solloway T., Shiffrin R. M., & Busemeyer J. R. (2014). Context effects produced by question orders reveal quantum nature of human judgments. Proceedings of the National Academy of Sciences, 111(26), 9431-9436.

[52] Wilson, R. C., & Collins, A. G. (2019). Ten simple rules for the computational modeling of behavioral data. eLife, 8, e49547.

[53] Wu Y., Radev S., & Tuerlinckx F. (2024). Testing and improving the robustness of amortized Bayesian inference for cognitive models. ArXiv.

[54] Zammit-Mangion A., Sainsbury-Dale M., & Huser R. (2024). Neural methods for amortized inference. ArXiv.

Funding

*This research was supported by the National Natural Science Foundation of China (Grant No. 32471097).
